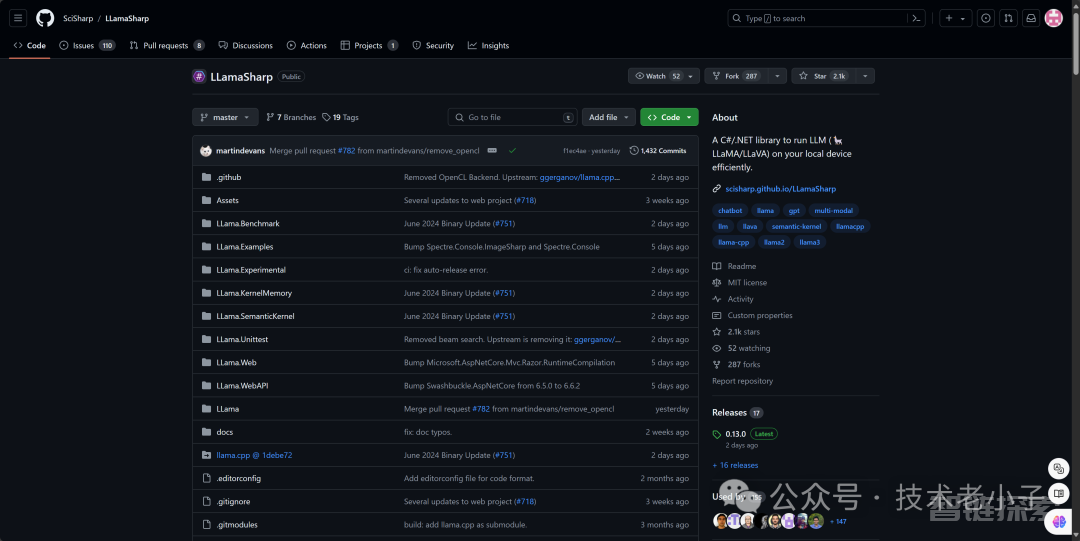

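LLamaSharp is a cross-platform library for running LLaMA/LLaVA models (and other models) on local devices. Built on llama.cpp, LLamaSharp runs inference efficiently on both CPU and GPU. Its high-level API and RAG support make it easy to deploy large language models (LLMs) in your applications.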

GitHub repository:

https://github.com/SciSharp/LLamaSharp


Download the code:

```shell
git clone https://github.com/SciSharp/LLamaSharp.git
```

Quick start

Installation

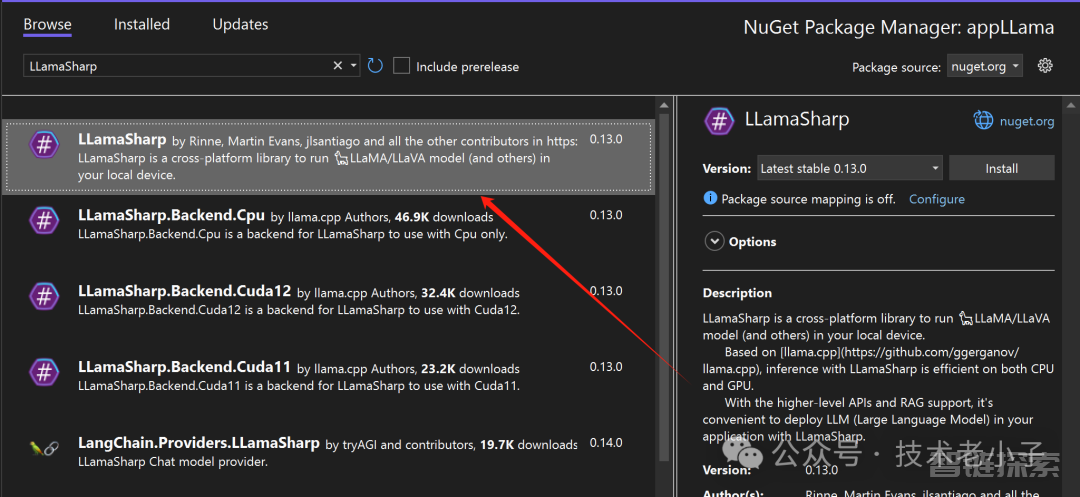

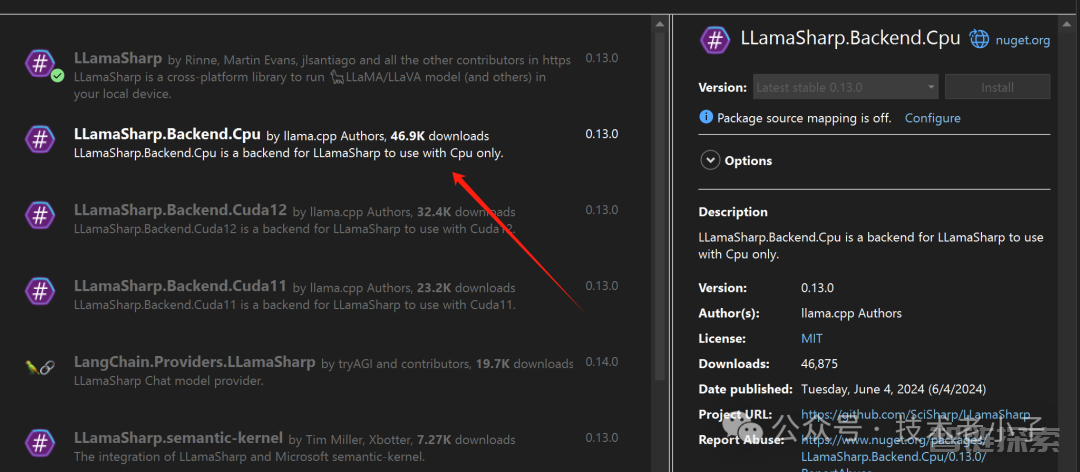

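For high performance, LLamaSharp interacts with native libraries compiled from C++, called backends. Backend packages are provided for CPU, CUDA, Metal and OpenCL on Windows, Linux and Mac. You don't need to compile any C++ code; just install a backend package.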

Install the LLamaSharp package:

```
PM> Install-Package LLamaSharp
```


Install one or more backend packages, or use a self-compiled backend:

LLamaSharp.Backend.Cpu: pure CPU backend for Windows, Linux and Mac. On Mac it also supports Metal (GPU).

LLamaSharp.Backend.Cuda11: CUDA 11 backend for Windows and Linux.

LLamaSharp.Backend.Cuda12: CUDA 12 backend for Windows and Linux.

LLamaSharp.Backend.OpenCL: OpenCL backend for Windows and Linux.

(Optional) For Microsoft semantic-kernel integration, install the LLamaSharp.semantic-kernel package.

(Optional) To enable RAG support, install the LLamaSharp.kernel-memory package (net6.0 or later only), which is built on the Microsoft kernel-memory integration.
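To make the backend list concrete, here is a minimal sketch of a helper that maps an operating system and an optionally detected CUDA major version to a package name. `BackendPicker` and `SuggestBackend` are hypothetical names, not part of LLamaSharp; CUDA detection is deliberately left to the caller, and OpenCL is never suggested. The mapping simply mirrors the list above.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical helper (not a LLamaSharp API): suggests a backend package name
// following the package list above. The caller supplies the CUDA major version.
public static class BackendPicker
{
    public static string SuggestBackend(OSPlatform os, int? cudaMajorVersion)
    {
        // The CUDA packages are listed for Windows and Linux only.
        bool cudaCapableOs = os == OSPlatform.Windows || os == OSPlatform.Linux;

        if (cudaCapableOs && cudaMajorVersion == 12)
            return "LLamaSharp.Backend.Cuda12";
        if (cudaCapableOs && cudaMajorVersion == 11)
            return "LLamaSharp.Backend.Cuda11";

        // The CPU package covers Windows, Linux and Mac (including Metal on Mac).
        return "LLamaSharp.Backend.Cpu";
    }

    // Convenience overload for the OS the program is currently running on.
    public static string SuggestBackendForCurrentOs(int? cudaMajorVersion)
    {
        OSPlatform os =
            RuntimeInformation.IsOSPlatform(OSPlatform.Windows) ? OSPlatform.Windows :
            RuntimeInformation.IsOSPlatform(OSPlatform.Linux) ? OSPlatform.Linux :
            OSPlatform.OSX;
        return SuggestBackend(os, cudaMajorVersion);
    }
}
```

For example, `BackendPicker.SuggestBackendForCurrentOs(12)` on a Linux machine returns `"LLamaSharp.Backend.Cuda12"`, while the same call on a Mac falls back to the CPU package.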

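Model preparation

LLamaSharp uses model files in GGUF format, which can be converted from PyTorch (.pth) and Hugging Face (.bin) formats. There are two ways to get a GGUF file:

Search Hugging Face for the model name plus 'gguf' to find files that have already been converted.

Convert the PyTorch or Hugging Face weights to GGUF yourself, following the instructions in the llama.cpp README to run its Python conversion scripts.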
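Whichever way you obtain the file, a wrong path or an unconverted .bin/.pth file is a common cause of load failures. GGUF files begin with the four ASCII bytes `GGUF`, so a cheap pre-flight check is possible. This is a minimal sketch; `GgufCheck.LooksLikeGguf` is a hypothetical helper, not a LLamaSharp API, and it only inspects the header, not whether the rest of the file is valid.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical pre-flight check (not part of LLamaSharp): verifies that a file
// starts with the GGUF magic bytes before it is handed to LLamaWeights.LoadFromFile.
public static class GgufCheck
{
    private static readonly byte[] Magic = Encoding.ASCII.GetBytes("GGUF");

    public static bool LooksLikeGguf(string path)
    {
        if (!File.Exists(path))
            return false;

        using FileStream stream = File.OpenRead(path);
        byte[] header = new byte[Magic.Length];
        int read = 0;
        // Stream.Read may return fewer bytes than requested, so loop until full or EOF.
        while (read < header.Length)
        {
            int n = stream.Read(header, read, header.Length - read);
            if (n == 0) break;
            read += n;
        }
        if (read < Magic.Length)
            return false;

        // Compare the header byte by byte with "GGUF".
        for (int i = 0; i < Magic.Length; i++)
        {
            if (header[i] != Magic[i]) return false;
        }
        return true;
    }
}
```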

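In general, we recommend downloading a quantized model, because quantization significantly reduces the memory required while having only a small impact on generation quality.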
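A back-of-the-envelope calculation shows why this matters: the weights alone take roughly parameter count × bits per weight / 8 bytes. The sketch below assumes a 7-billion-parameter model and nominal bit widths of 16, 8 and 4. Real GGUF quantization types such as Q4_K_M also store per-block scale data, and the KV cache and runtime buffers come on top, so treat the results as lower bounds rather than exact file or memory sizes.

```csharp
using System;

// Rough lower-bound estimate of weight storage at different bit widths.
// Ignores quantization block overhead, the KV cache and runtime buffers.
public static class QuantizationEstimate
{
    public static long WeightBytes(long parameterCount, int bitsPerWeight)
        => parameterCount * bitsPerWeight / 8;

    public static double ToGiB(long bytes) => bytes / (1024.0 * 1024.0 * 1024.0);

    public static void PrintTable(long parameterCount)
    {
        foreach (int bits in new[] { 16, 8, 4 })
        {
            long bytes = WeightBytes(parameterCount, bits);
            Console.WriteLine($"{bits,2}-bit: {ToGiB(bytes):F2} GiB");
        }
    }
}
```

Calling `QuantizationEstimate.PrintTable(7_000_000_000)` gives about 13.04 GiB at 16 bits, 6.52 GiB at 8 bits and 3.26 GiB at 4 bits, which is why a 4-bit 7B model fits on machines where the full-precision one does not.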

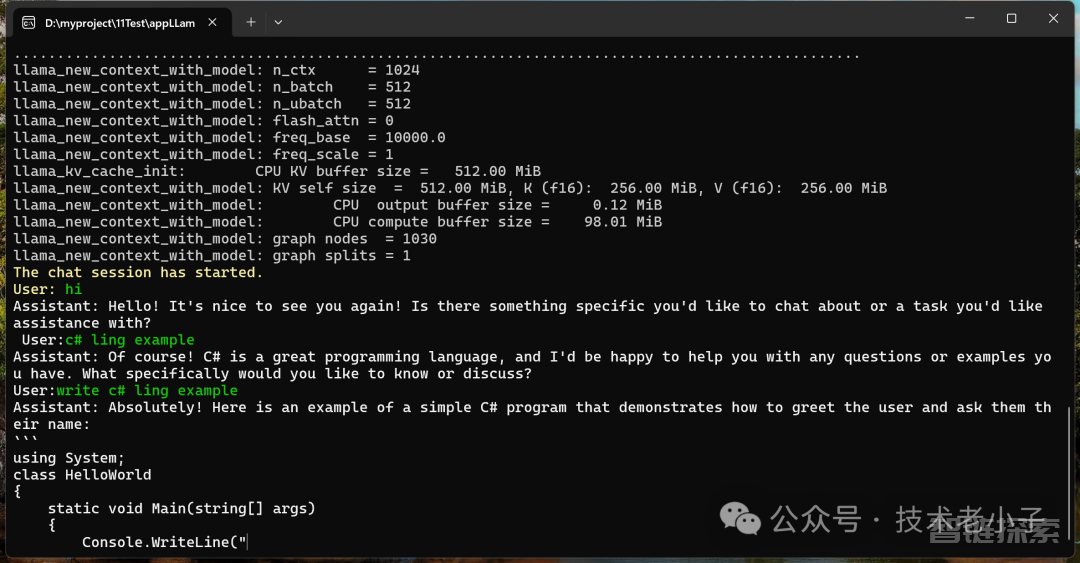

Simple chat

LLamaSharp comes with a simple console demo that shows how to use the library for inference. Here is a basic example:


```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using LLama;
using LLama.Common;

namespace appLLama
{
    internal class Program
    {
        // async Main so the process waits for the chat loop instead of exiting immediately.
        static async Task Main(string[] args)
        {
            await Chat();
        }

        static async Task Chat()
        {
            string modelPath = @"E:\Models\llama-2-7b-chat.Q4_K_M.gguf"; // change it to your own model path.
            var parameters = new ModelParams(modelPath)
            {
                ContextSize = 1024, // The longest length of chat as memory.
                GpuLayerCount = 5   // How many layers to offload to GPU. Please adjust it according to your GPU memory.
            };
            using var model = LLamaWeights.LoadFromFile(parameters);
            using var context = model.CreateContext(parameters);
            var executor = new InteractiveExecutor(context);

            // Add chat histories as prompt to tell AI how to act.
            var chatHistory = new ChatHistory();
            chatHistory.AddMessage(AuthorRole.System, "Transcript of a dialog, where the User interacts with an Assistant named Bob. Bob is helpful, kind, honest, good at writing, and never fails to answer the User's requests immediately and with precision.");
            chatHistory.AddMessage(AuthorRole.User, "Hello, Bob.");
            chatHistory.AddMessage(AuthorRole.Assistant, "Hello. How may I help you today?");

            ChatSession session = new(executor, chatHistory);

            InferenceParams inferenceParams = new InferenceParams()
            {
                MaxTokens = 256, // No more than 256 tokens should appear in answer. Remove it if antiprompt is enough for control.
                AntiPrompts = new List<string> { "User:" } // Stop generation once antiprompts appear.
            };

            Console.ForegroundColor = ConsoleColor.Yellow;
            Console.Write("The chat session has started.\nUser: ");
            Console.ForegroundColor = ConsoleColor.Green;
            string userInput = Console.ReadLine() ?? "";

            while (userInput != "exit")
            {
                // Stream the response piece by piece as it is generated.
                await foreach (var text in session.ChatAsync(
                    new ChatHistory.Message(AuthorRole.User, userInput),
                    inferenceParams))
                {
                    Console.ForegroundColor = ConsoleColor.White;
                    Console.Write(text);
                }

                Console.ForegroundColor = ConsoleColor.Green;
                userInput = Console.ReadLine() ?? "";
            }
        }
    }
}
```

Model path and parameters: specify the model path, the context size, and the number of layers to offload to the GPU.

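Load the model and create a context: load the model from the file and initialize a context with the same parameters.

Executor and chat history: create an InteractiveExecutor and set up the initial chat history, including a system message and an opening exchange between the user and the assistant.

Session and inference parameters: create a ChatSession and set the inference parameters, including the maximum number of tokens and the anti-prompts.

User input and reply generation: start the chat session, read user input, stream the assistant's reply asynchronously, and stop generation when an anti-prompt appears.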
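The last step combines two ideas: consuming a stream with `await foreach`, and stopping once an anti-prompt shows up or a token limit is reached. The self-contained simulation below illustrates that idea with a fake stream standing in for `session.ChatAsync`. It is an assumption-laden sketch of the concept behind `AntiPrompts` and `MaxTokens`, not how LLamaSharp implements them internally; `FakeTokens` and `CollectUntilStop` are hypothetical names, and this sketch strips the anti-prompt from the returned text for clarity.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

public static class AntiPromptDemo
{
    // Stand-in for a streaming model: yields pre-baked text pieces asynchronously.
    public static async IAsyncEnumerable<string> FakeTokens(IEnumerable<string> pieces)
    {
        foreach (string piece in pieces)
        {
            await Task.Yield();
            yield return piece;
        }
    }

    // Reads the stream until any anti-prompt appears in the accumulated text
    // (it may be split across pieces) or maxTokens pieces have been consumed.
    public static async Task<string> CollectUntilStop(
        IAsyncEnumerable<string> stream, IReadOnlyList<string> antiPrompts, int maxTokens)
    {
        var output = new StringBuilder();
        int count = 0;
        await foreach (string piece in stream)
        {
            output.Append(piece);
            count++;

            string text = output.ToString();
            int cut = -1;
            foreach (string anti in antiPrompts)
            {
                int idx = text.IndexOf(anti, StringComparison.Ordinal);
                if (idx >= 0 && (cut < 0 || idx < cut)) cut = idx;
            }
            if (cut >= 0)
                return text.Substring(0, cut); // keep only the text before the anti-prompt

            if (count >= maxTokens)
                break;
        }
        return output.ToString();
    }
}
```

Note that the anti-prompt `"User:"` arrives split across two pieces (`"\nUs"` and `"er:"`) in a typical stream, which is why the sketch checks the accumulated text rather than each piece on its own.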

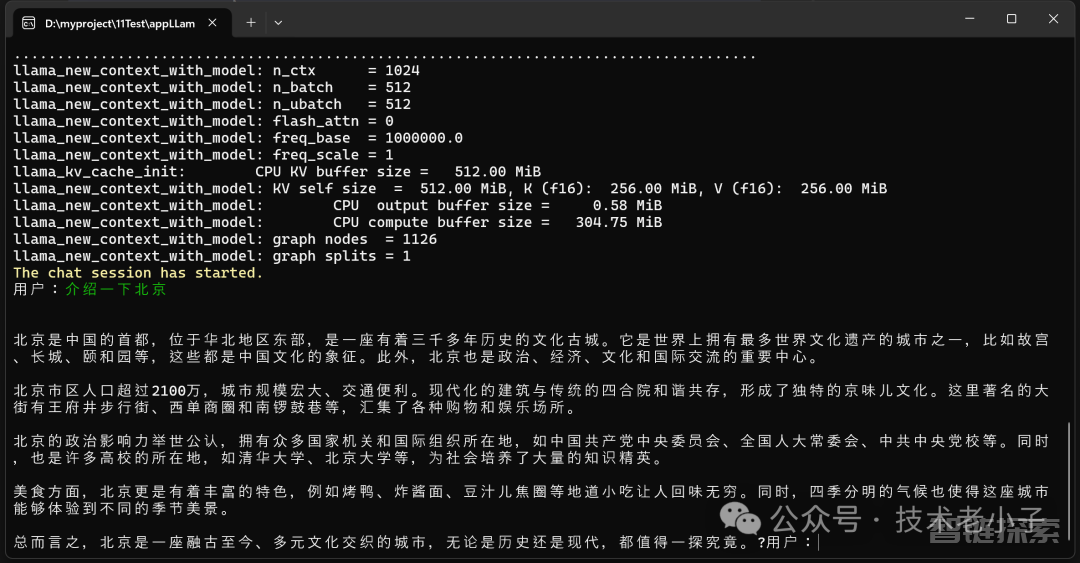


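You will find that Chinese support is still weak, even when using a quantized Qwen model.

Official example for handling Chinese

Here I switched the official example to a Qwen model: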

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;
using LLama;
using LLama.Common;

namespace appLLama
{
    internal class Program
    {
        static async Task Main(string[] args)
        {
            // Register provider for GB2312 encoding
            Encoding.RegisterProvider(CodePagesEncodingProvider.Instance);
            // Await the chat loop; otherwise Main returns and the process exits immediately.
            await Run();
        }

        private static string ConvertEncoding(string input, Encoding original, Encoding target)
        {
            byte[] bytes = original.GetBytes(input);
            var convertedBytes = Encoding.Convert(original, target, bytes);
            return target.GetString(convertedBytes);
        }

        public static async Task Run()
        {
            Console.ForegroundColor = ConsoleColor.Yellow;
            Console.WriteLine("This example shows how to use Chinese with gb2312 encoding, which is common in windows. It's recommended" +
                " to use https://huggingface.co/hfl/chinese-alpaca-2-7b-gguf/blob/main/ggml-model-q5_0.gguf, which has been verified by LLamaSharp developers.");
            Console.ForegroundColor = ConsoleColor.White;

            string modelPath = @"E:\LMModels\ay\Repository\qwen1_5-7b-chat-q8_0.gguf"; // @"E:\Models\llama-2-7b-chat.Q4_K_M.gguf";
            var parameters = new ModelParams(modelPath)
            {
                ContextSize = 1024,
                Seed = 1337,
                GpuLayerCount = 5,
                Encoding = Encoding.UTF8
            };
            using var model = LLamaWeights.LoadFromFile(parameters);
            using var context = model.CreateContext(parameters);
            var executor = new InteractiveExecutor(context);

            var chatHistory = new ChatHistory();
            var session = new ChatSession(executor, chatHistory);
            // Name the user role "用户" so the rendered history matches the "用户:" anti-prompt;
            // the default transform labels turns as "User:" / "Assistant:".
            session.WithHistoryTransform(new LLamaTransforms.DefaultHistoryTransform("用户", "助手"));

            InferenceParams inferenceParams = new InferenceParams()
            {
                Temperature = 0.9f,
                AntiPrompts = new List<string> { "用户:" }
            };

            Console.ForegroundColor = ConsoleColor.Yellow;
            Console.WriteLine("The chat session has started.");
            // Show the prompt.
            Console.ForegroundColor = ConsoleColor.White;
            Console.Write("用户:");
            Console.ForegroundColor = ConsoleColor.Green;
            string userInput = Console.ReadLine() ?? "";

            while (userInput != "exit")
            {
                // Convert the encoding from gb2312 to utf8 for the language model
                // and later saving to the history json file.
                userInput = ConvertEncoding(userInput, Encoding.GetEncoding("gb2312"), Encoding.UTF8);

                if (userInput == "save")
                {
                    session.SaveSession("chat-with-kunkun-chinese");
                    Console.ForegroundColor = ConsoleColor.Yellow;
                    Console.WriteLine("Session saved.");
                }
                else if (userInput == "regenerate")
                {
                    Console.ForegroundColor = ConsoleColor.Yellow;
                    Console.WriteLine("Regenerating last response ...");
                    await foreach (var text in session.RegenerateAssistantMessageAsync(inferenceParams))
                    {
                        Console.ForegroundColor = ConsoleColor.White;
                        // Convert the encoding from utf8 to gb2312 for the console output.
                        Console.Write(ConvertEncoding(text, Encoding.UTF8, Encoding.GetEncoding("gb2312")));
                    }
                }
                else
                {
                    await foreach (var text in session.ChatAsync(
                        new ChatHistory.Message(AuthorRole.User, userInput),
                        inferenceParams))
                    {
                        Console.ForegroundColor = ConsoleColor.White;
                        Console.Write(text);
                    }
                }

                Console.ForegroundColor = ConsoleColor.Green;
                userInput = Console.ReadLine() ?? "";
                Console.ForegroundColor = ConsoleColor.White;
            }
        }
    }
}
```
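It helps to know what `ConvertEncoding` actually does here. A .NET `string` is always UTF-16 in memory, so encoding it to GB2312 bytes, converting those bytes to UTF-8 and decoding again returns the same string for every character the code page can represent; characters outside the code page (emoji, for example) are typically replaced rather than preserved. The sketch below reuses the example's conversion logic to show the round trip. `EncodingRoundTrip` is a hypothetical wrapper, and the `UseUtf8Console` alternative is an assumption worth testing in your terminal, not a guaranteed fix for garbled console output.

```csharp
using System;
using System.Text;

public static class EncodingRoundTrip
{
    // Same logic as ConvertEncoding in the example above.
    public static string ConvertEncoding(string input, Encoding original, Encoding target)
    {
        byte[] bytes = original.GetBytes(input);
        byte[] convertedBytes = Encoding.Convert(original, target, bytes);
        return target.GetString(convertedBytes);
    }

    public static Encoding Gb2312()
    {
        // Code-page encodings such as GB2312 need this provider on .NET Core / .NET 5+.
        Encoding.RegisterProvider(CodePagesEncodingProvider.Instance);
        return Encoding.GetEncoding("gb2312");
    }

    // Alternative worth trying: let the console read and write UTF-8 directly.
    public static void UseUtf8Console()
    {
        Console.InputEncoding = Encoding.UTF8;
        Console.OutputEncoding = Encoding.UTF8;
    }
}
```

With Chinese text such as `"你好世界"`, converting GB2312 to UTF-8 (or back) yields the identical string, so any garbling seen in the console comes from how the terminal decodes bytes, not from the string the model receives.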


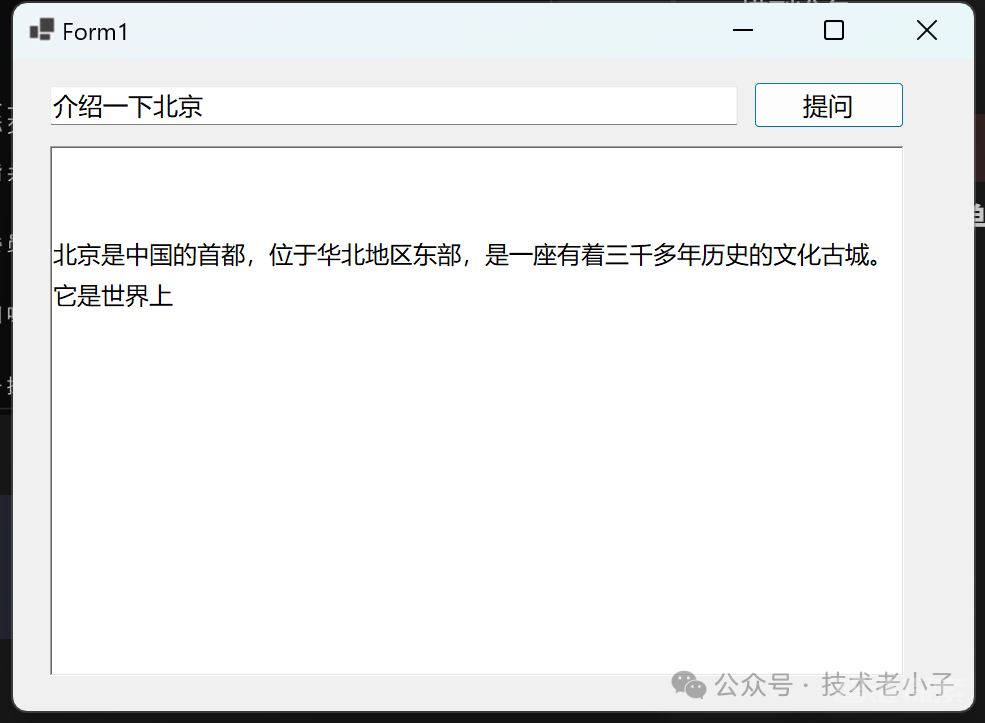

A simple WinForms example

The Chat class:

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;
using LLama;
using LLama.Common;

public class Chat : IDisposable
{
    // Kept as fields so the native model and context live as long as the form does.
    private LLamaWeights? model;
    private LLamaContext? context;
    private ChatSession? session;

    private readonly InferenceParams inferenceParams = new InferenceParams()
    {
        Temperature = 0.9f,
        AntiPrompts = new List<string> { "用户:" }
    };

    public void Init()
    {
        string modelPath = @"E:\LMModels\ay\Repository\qwen1_5-7b-chat-q8_0.gguf"; // @"E:\Models\llama-2-7b-chat.Q4_K_M.gguf";
        var parameters = new ModelParams(modelPath)
        {
            ContextSize = 1024,
            Seed = 1337,
            GpuLayerCount = 5,
            Encoding = Encoding.UTF8
        };
        model = LLamaWeights.LoadFromFile(parameters);
        context = model.CreateContext(parameters);
        var executor = new InteractiveExecutor(context);
        session = new ChatSession(executor, new ChatHistory());
        // Match the user role name to the "用户:" anti-prompt.
        session.WithHistoryTransform(new LLamaTransforms.DefaultHistoryTransform("用户", "助手"));
    }

    // Handles one user message per call; the form calls it again for the next message.
    public async Task Run(string userInput, Action<string> callback)
    {
        if (session == null)
            throw new InvalidOperationException("Call Init() first.");

        // WinForms strings are already Unicode, so no GB2312 conversion is needed here.
        if (userInput == "save")
        {
            session.SaveSession("chat-with-kunkun-chinese");
        }
        else if (userInput == "regenerate")
        {
            await foreach (var text in session.RegenerateAssistantMessageAsync(inferenceParams))
                callback(text);
        }
        else if (userInput != "exit")
        {
            await foreach (var text in session.ChatAsync(
                new ChatHistory.Message(AuthorRole.User, userInput),
                inferenceParams))
                callback(text);
        }
    }

    public void Dispose()
    {
        context?.Dispose();
        model?.Dispose();
    }
}
```

Form1 UI events:

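```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;

public partial class Form1 : Form
{
    private readonly Chat chat = new Chat();

    public Form1()
    {
        InitializeComponent();
        Encoding.RegisterProvider(CodePagesEncodingProvider.Instance);
        chat.Init();
    }

    private async void btnSend_Click(object sender, EventArgs e)
    {
        // Read the text box on the UI thread; worker threads must not touch controls.
        string message = txtMsg.Text;

        // Streamed text arrives on a worker thread, so marshal each piece back to the UI thread.
        var call = new Action<string>(x => this.Invoke(() => txtLog.AppendText(x)));

        var button = (Button)sender;
        button.Enabled = false; // avoid overlapping requests on the same session
        try
        {
            // Run inference off the UI thread and await it so exceptions are observed.
            await Task.Run(() => chat.Run(message, call));
        }
        finally
        {
            button.Enabled = true;
        }
    }
}
```

For more recent examples, see the official documentation, which covers them in more depth: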
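One design alternative for the form's callback, offered as a sketch under assumptions: instead of wrapping `this.Invoke` in an `Action<string>`, the chat code can accept an `IProgress<string>`. A `Progress<T>` created on the UI thread captures the WinForms synchronization context and posts each report back to that thread, so the form could pass `new Progress<string>(txtLog.AppendText)`. The console-runnable version below fakes the token stream (standing in for `session.ChatAsync`) so it runs without a model; `ProgressStreaming`, `FakeReply`, `StreamReply` and `Collector` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ProgressStreaming
{
    // Stand-in for session.ChatAsync(...): yields a few text pieces with a small delay.
    public static async IAsyncEnumerable<string> FakeReply()
    {
        foreach (string piece in new[] { "Hello", "! ", "How can I ", "help?" })
        {
            await Task.Delay(10);
            yield return piece;
        }
    }

    // Forwards every streamed piece to the reporter. In a form, pass a
    // Progress<string> created on the UI thread so reports land on that thread.
    public static async Task StreamReply(IAsyncEnumerable<string> pieces, IProgress<string> progress)
    {
        await foreach (string piece in pieces)
        {
            progress.Report(piece);
        }
    }

    // Synchronous reporter for use outside a UI framework (e.g. in tests).
    public sealed class Collector : IProgress<string>
    {
        public List<string> Items { get; } = new();
        public void Report(string value) => Items.Add(value);
    }
}
```

The advantage of this shape is that the chat code no longer needs to know about `Control.Invoke` at all; whoever creates the `Progress<T>` decides which thread receives the text.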

https://scisharp.github.io/LLamaSharp/0.13.0/
